RustDesk: Moving Beyond TeamViewer

There was a time when TeamViewer was the go-to tool for remote access and IT support. It was easy to use, widely available, and—let’s be honest—one of the only decent options out there. But times have changed, and so have my feelings about it.

Now? I loathe it. Between the constant connection issues, the aggressive licensing nags, and the ever-growing list of security concerns, TeamViewer has gone from a useful tool to a never-ending headache. So that raises the question: what will take its place?

Over the past few years, I’ve tinkered with other remote access options, usually landing on Apache Guacamole as my favorite. However, it’s not as versatile for ad-hoc connections, and sometimes it’s just buggy. Like printers, Guacamole seems able to smell the stress when something is important or time-sensitive, and those are exactly the moments it picks to have trouble connecting.

That sent me hunting for a remote access tool that checks all of these boxes:

easy to set up and maintain

can provide unattended access

requires MFA

can be used on-prem or off-prem

open source

cross platform

useful for remote support in addition to remote access

The winner of this search?

RustDesk

RustDesk meets every one of my criteria, with the added bonus that it can be self-hosted. Let’s get started!

Download and Install the RustDesk Client

To start, pick the computer you want to access remotely and the computer you’ll connect from. On each one, download and install the latest stable RustDesk client from the official RustDesk GitHub repo at rustdesk/rustdesk (https://github.com/rustdesk/rustdesk/releases/).

Once you have the clients downloaded and installed, the next question to ask yourself is…

Cloud or On-Prem?

There are several ways to host the server side. For my setup, I’ll run the RustDesk Signaling Server and Relay Server on a public cloud virtual private server at Linode. If you haven’t used Linode before, score a $100 credit from my referral link here. If you don’t want to go this route, you can install on a local server and forward the RustDesk ports (TCP 21115-21119 and UDP 21116) so it’s reachable from the internet. I haven’t tested it, but in theory the same result should be possible with a Cloudflare tunnel as described in our previous article here. And if you only need access from your LAN, you can simply spin up the server on your network; the steps are the same, just on a local server instead of in the cloud.

Linode, however, is probably the quickest and easiest way to get this project up and running, and the cost is modest because the cheapest Linode VPS, at $5/month, is enough to start with.

BILLING NOTE: With Linode, even if you power off the server, monthly billing will continue until the machine is deleted.
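If there’s a firewall between the clients and the server (ufw on the VM, a Linode Cloud Firewall, or your router for an on-prem install), the hbbs and hbbr ports have to be allowed through it. Here’s a minimal sketch assuming ufw is the firewall on the server; the port list comes from RustDesk’s self-hosting docs, and the helper name rustdesk_ufw_rules is my own. It only prints the commands so you can review them before applying anything.

```shell
#!/usr/bin/env bash
# Minimal sketch, assuming ufw is the host firewall on the RustDesk server.
# Ports per RustDesk's self-hosting docs:
#   hbbs: 21115/tcp (NAT type test), 21116/tcp+udp (ID registration,
#         heartbeat, hole punching), 21118/tcp (web client support)
#   hbbr: 21117/tcp (relay), 21119/tcp (web client support)
# rustdesk_ufw_rules is a hypothetical helper; it prints, it does not apply.

rustdesk_ufw_rules() {
  echo "ufw allow 22/tcp"           # keep SSH open so enabling ufw can't lock you out
  echo "ufw allow 21115:21119/tcp"  # every hbbs/hbbr TCP port
  echo "ufw allow 21116/udp"        # the one UDP port hbbs needs
}

rustdesk_ufw_rules
```

Saved as a script, running it shows the rules; piping its output to sudo sh applies them, and sudo ufw enable then turns the firewall on.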

Setting up a Cloud Server

In Linode, click +Create and select Linode.

On the Create screen, select a region close to you, select the most recent LTS version of Ubuntu Linux, and select the $5/month Nanode from the Shared CPU tab. Depending on the size of your deployment and number of users, you may need to upgrade to a more robust plan, but this is a great place to start.

Next, name your server and add any tags if you’d like, then set the root password. Be sure to make a note of this.

Then, click “Create Linode”.

It will take a few minutes to spin up the virtual machine. Once it’s running, we’ll connect to it and install Docker.

Connecting to Your Server

Once your server’s status shows Running in Linode, its public IP address will be displayed. Make a note of your IP… mine is 45.79.200.147 (don’t worry… this machine will be deleted before I publish the article). Next, open a terminal on your computer and connect to the VM via SSH:

ssh root@45.79.200.147

The first time you connect, SSH asks you to confirm the server’s host key fingerprint (type yes), and then prompts for the root password you set earlier.

Next, run the following command to refresh the package lists and upgrade the installed packages:

sudo apt update && sudo apt upgrade -y

Docker Install

Now that you’re at the command line of your new, freshly updated server, we’re going to install Docker. Docker is a platform that runs applications in containers: self-contained packages that bundle an application with everything it needs to run.

First, go to the Docker Install page for Ubuntu found here (or copy the code below) to set up Docker’s apt repository:

# Add Docker's official GPG key:
sudo apt-get update
sudo apt-get install ca-certificates curl
sudo install -m 0755 -d /etc/apt/keyrings
sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
sudo chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:
echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
  $(. /etc/os-release && echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
sudo apt-get update

Paste that block into your server’s terminal and hit Enter, and it will do its thing.

Next, you’ll copy and paste this command (also from the Docker Ubuntu install page linked above):

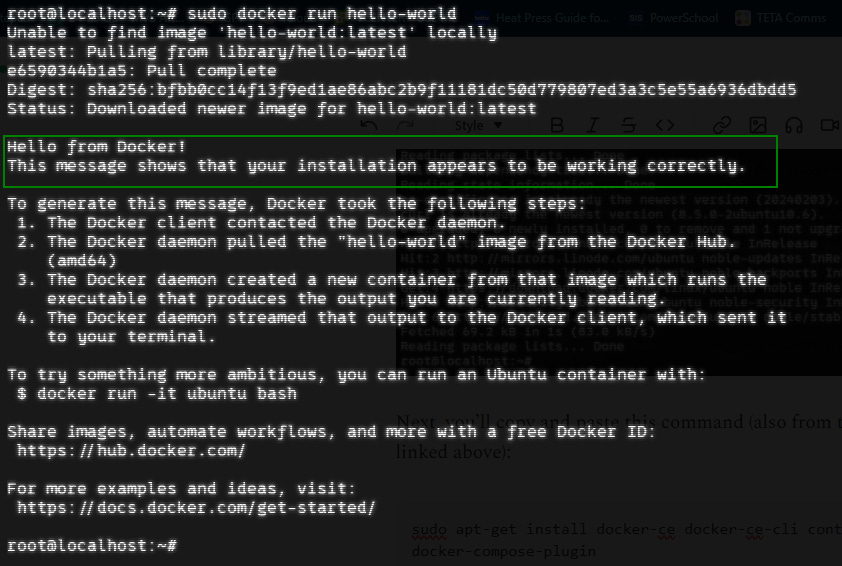

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

After that runs, you can make sure Docker is working with this command:

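sudo docker run hello-world

If successful, the container prints a “Hello from Docker!” message confirming that your installation appears to be working correctly.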
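Since the RustDesk setup below relies on the docker compose command, it can be worth checking that the Compose plugin installed too. A minimal sketch; require_cmd and check_docker are hypothetical helper names, not part of Docker, and docker compose version only reports the plugin’s version without needing to start any containers.

```shell
#!/usr/bin/env bash
# Minimal sketch: confirm Docker and the Compose plugin are usable.
# require_cmd and check_docker are hypothetical helpers, not Docker tools.

require_cmd() {
  # Succeeds if the named command is on PATH; otherwise prints a hint to stderr.
  if command -v "$1" >/dev/null 2>&1; then
    return 0
  fi
  echo "missing command: $1" >&2
  return 1
}

check_docker() {
  require_cmd docker || return 1
  # "docker compose" comes from the docker-compose-plugin package installed above.
  if ! docker compose version >/dev/null 2>&1; then
    echo "docker compose plugin not found (is docker-compose-plugin installed?)" >&2
    return 1
  fi
  echo "Docker and Docker Compose look good"
}
```

Paste it into the server’s shell and run check_docker; a "missing" message points at the package to reinstall.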

Setting Up RustDesk Servers

Now that Docker is up and running, installing RustDesk will be SIMPLE. There is a documentation page available from RustDesk here that outlines the install process with Docker.

On your RustDesk server, create a rustdesk directory to hold a YAML configuration file:

mkdir rustdesk

Now navigate to the directory:

cd rustdesk

Then create the YAML file:

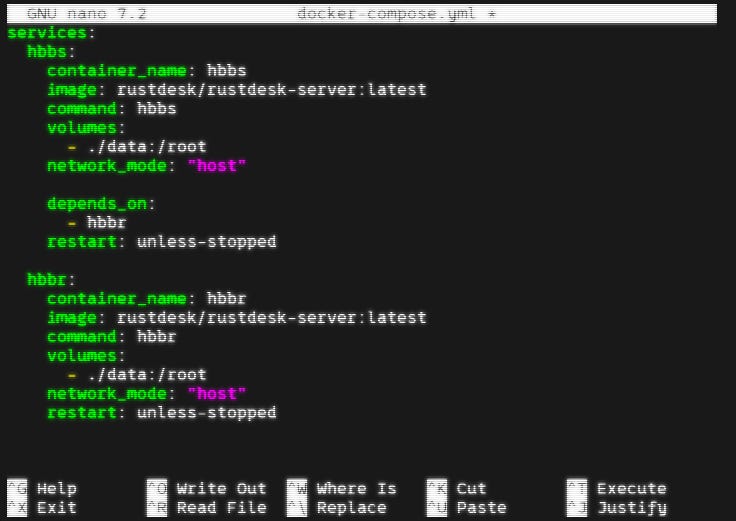

nano docker-compose.yml

This opens the nano editor. Paste the following text into it, then save and exit by pressing Ctrl+X, then Y, then Enter.

services:
  hbbs:
    container_name: hbbs
    image: rustdesk/rustdesk-server:latest
    command: hbbs
    volumes:
      - ./data:/root
    network_mode: "host"
    depends_on:
      - hbbr
    restart: unless-stopped

  hbbr:
    container_name: hbbr
    image: rustdesk/rustdesk-server:latest
    command: hbbr
    volumes:
      - ./data:/root
    network_mode: "host"
    restart: unless-stopped

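This YAML file tells Docker to pull the rustdesk-server image and start two containers from it: hbbs is the Signaling (ID) Server, and hbbr is the Relay Server. Both use the host’s network directly (network_mode: "host") and share the ./data directory, which is where hbbs stores the key pair it generates. The restart: unless-stopped policy brings them back up automatically after a reboot.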
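If you’d rather skip nano, the same file can be written straight from the shell with a heredoc. A minimal sketch; write_rustdesk_compose is a hypothetical helper, and its default directory of ~/rustdesk is an assumption that matches creating the directory from root’s home as above.

```shell
#!/usr/bin/env bash
# Minimal sketch: write the RustDesk docker-compose.yml without an editor.
# write_rustdesk_compose is a hypothetical helper; ~/rustdesk is an assumed default.

write_rustdesk_compose() {
  local dir="${1:-$HOME/rustdesk}"
  mkdir -p "$dir"
  # The quoted 'EOF' stops the shell from expanding anything inside the file.
  cat > "$dir/docker-compose.yml" <<'EOF'
services:
  hbbs:
    container_name: hbbs
    image: rustdesk/rustdesk-server:latest
    command: hbbs
    volumes:
      - ./data:/root
    network_mode: "host"
    depends_on:
      - hbbr
    restart: unless-stopped

  hbbr:
    container_name: hbbr
    image: rustdesk/rustdesk-server:latest
    command: hbbr
    volumes:
      - ./data:/root
    network_mode: "host"
    restart: unless-stopped
EOF
}
```

Running write_rustdesk_compose and then cd ~/rustdesk leaves you in the same place as the nano route.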

To get Docker to create the containers, we need one last command:

docker compose up -d

In just a few seconds, the images should download and both containers should start.
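To confirm the servers are reachable from the outside, you can probe their TCP ports from one of your client machines. A minimal sketch, assuming bash (for its /dev/tcp redirection) and the coreutils timeout command, so Linux or WSL; port_open and check_rustdesk_ports are hypothetical helper names. UDP 21116 can’t be checked this way because UDP has no connection handshake.

```shell
#!/usr/bin/env bash
# Minimal sketch: check that a RustDesk server's core TCP ports accept connections.
# Assumes bash (/dev/tcp) and coreutils `timeout`; helper names are hypothetical.

port_open() {
  local host="$1" port="$2"
  # Redirecting to /dev/tcp/HOST/PORT makes bash attempt a TCP connection;
  # host and port are passed as arguments rather than spliced into the string.
  timeout 3 bash -c ': > "/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

check_rustdesk_ports() {
  local host="$1" port failed=0
  # 21115-21116 belong to hbbs, 21117 to hbbr.
  for port in 21115 21116 21117; do
    if port_open "$host" "$port"; then
      echo "open    $host:$port"
    else
      echo "CLOSED  $host:$port"
      failed=1
    fi
  done
  return "$failed"
}
```

For example, check_rustdesk_ports 45.79.200.147 should report all three ports as open once both containers are up.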

Connecting the RustDesk Containers to the RustDesk Client

Now that the RustDesk clients are installed and the RustDesk servers are running, we need two pieces of information to link them: the server’s public key and its IP address. While still SSHed into the virtual machine in the rustdesk directory, change to the data directory:

cd data

Inside the data directory, run ls and you should see, among other files, the key pair that hbbs generated on first start: id_ed25519 (the private key) and id_ed25519.pub (the public key). Use cat to view the public key:

cat id_ed25519.pub

The file has no trailing newline, so your shell prompt is printed immediately after the key. Copy only the part that comes before your username. In my case, the key is:

pPjgo1nKhvrIkPa5CkOf9mAcFXLjqWZFiHuvqrHH2Zo=

You’ll also need the server’s IP address. If you are using a Linode server, it’s the same IP you used to start your SSH session. If you’re not sure, run ifconfig to see which IP is attached to the eth0 interface:

ifconfig

On recent Ubuntu releases ifconfig may not be installed; ip addr show eth0 shows the same information. Now that you have the public key and the IP address, we’re ready to connect the server and the clients.
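Before pasting the key into the clients, a quick sanity check can save some head-scratching. An Ed25519 public key is 32 bytes, which base64-encodes to 44 characters like the one above. This minimal sketch prints the key on its own line and refuses anything that doesn’t decode to 32 bytes; it assumes GNU coreutils base64 (as on Ubuntu), and rustdesk_pubkey is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Minimal sketch: print the RustDesk public key on its own line and check that
# it decodes to 32 bytes, the size of an Ed25519 public key.
# Assumes GNU coreutils base64; rustdesk_pubkey is a hypothetical helper.

rustdesk_pubkey() {
  local file="${1:-./id_ed25519.pub}" key bytes
  # Strip all whitespace; the file normally has no trailing newline anyway.
  key="$(tr -d '[:space:]' < "$file")" || return 1
  bytes="$(printf '%s' "$key" | base64 -d 2>/dev/null | wc -c)"
  if [ "$bytes" -ne 32 ]; then
    echo "unexpected key format in $file ($bytes bytes)" >&2
    return 1
  fi
  printf '%s\n' "$key"
}
```

Run from the data directory, rustdesk_pubkey prints just the key, so there’s no prompt to trim off. Only the .pub file ever goes into the clients; id_ed25519 is the private key and should stay on the server.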

Configuring the Clients

Open the client and click on the 3 dots next to the ID.

Then, select Network → ID/Relay Server.

Next, enter the IP address of your Docker server in both the ID Server and Relay Server fields, then enter the public key in the Key field.

Repeat this process on any other devices that you will be remoting to or remoting from. This step tells the client to make connections through your RustDesk servers.

When finished, the bottom of your RustDesk screen should show this Ready indicator:

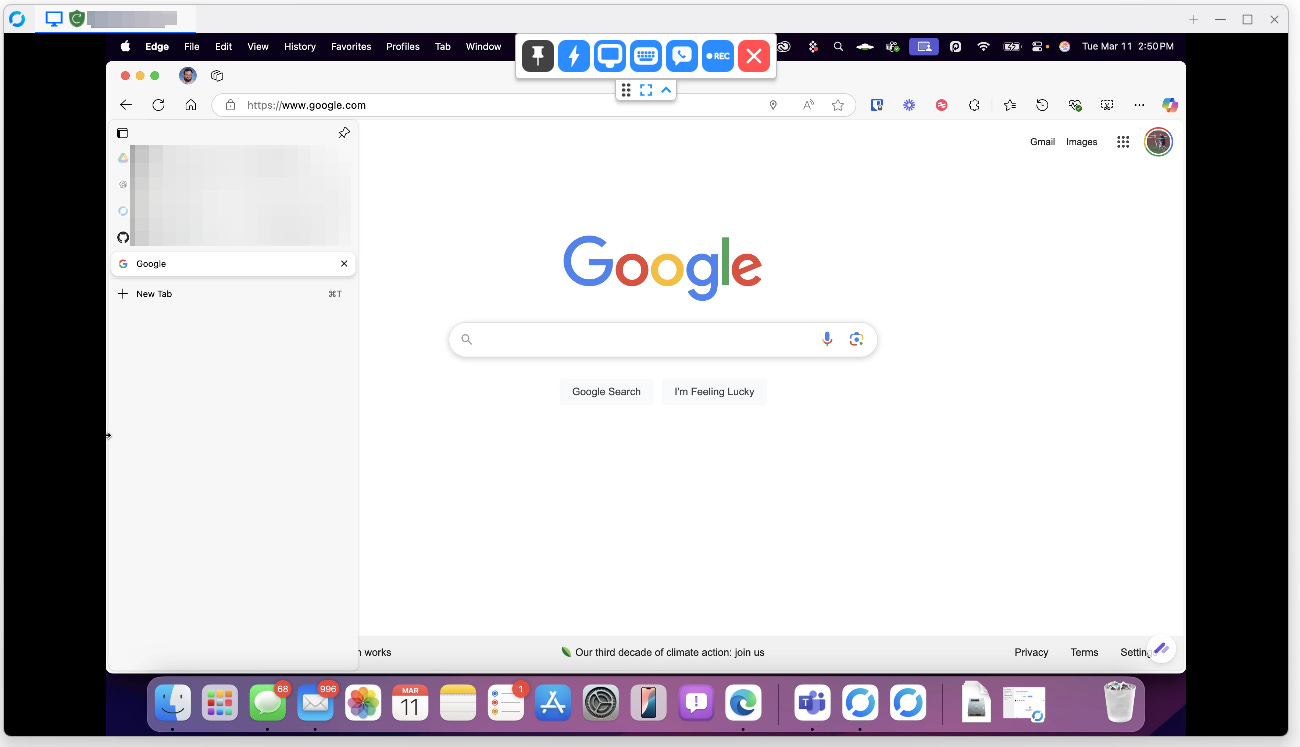

Take It for a Spin!

Once you have the clients configured, getting them to talk is relatively easy, and if you’ve used TeamViewer before, it will be very intuitive.

By default, each client displays an ID and a One-time password. To connect, enter the ID of the device you want to access into the “Control Remote Desktop” box in RustDesk on the device you’re connecting from.

When making the connection, you’ll be prompted to enter the RustDesk password for the device, which by default is the One-time password displayed in the RustDesk client, though you can optionally configure a persistent password that can be used for unattended access.

Now that the servers and clients are configured and connected, the next suggested step is to enable MFA.

Add MFA

To configure MFA, click on the 3 dots next to ID again, and this time select Security.

At the top, there will be a banner to click on to Unlock security settings. You’ll be prompted for admin rights to be able to access the settings.

Once Security settings are unlocked, scroll down to 2FA and check the box.

You’ll then be prompted to set up MFA using any standard authenticator app.

Other Notable Features to Explore:

Session Recording

Under the “General” settings tab, you can configure RustDesk to automatically record all incoming sessions and/or all outgoing sessions. There is also a button to record on-the-fly at the top of the RustDesk menu bar.

File Transfer

If you only need to send some files to the computer, you can do so without opening a full remote session by selecting “Transfer file” instead of “Connect”.

Direct IP Access

If all the devices you’re managing are on the same LAN and you want to avoid setting up a server, RustDesk can also connect devices directly. To enable it, go to Settings → Security, check Enable Direct IP Access, and assign a port number. Do this on all applicable clients.
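On a Linux client running ufw, the direct-access port also has to be allowed, ideally only from your LAN. A minimal sketch that prints such a rule; it assumes ufw and TCP, and it assumes 21118 as the default port, so pass whichever port you actually assigned. direct_ip_ufw_rule is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Minimal sketch: print a ufw rule allowing RustDesk direct IP access from one subnet.
# Assumes a Linux client using ufw; 21118 is an assumed default port.
# direct_ip_ufw_rule is a hypothetical helper; it prints, it does not apply.

direct_ip_ufw_rule() {
  local subnet="$1" port="${2:-21118}"
  # Reject anything that isn't a plain number before range-checking it.
  case "$port" in
    ''|*[!0-9]*) echo "port must be a number: $port" >&2; return 1 ;;
  esac
  if [ "$port" -lt 1 ] || [ "$port" -gt 65535 ]; then
    echo "port out of range: $port" >&2
    return 1
  fi
  echo "ufw allow from $subnet to any port $port proto tcp"
}
```

For example, direct_ip_ufw_rule 192.168.1.0/24 | sudo sh opens the default port to that subnet only.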

End-to-End Encryption

There’s nothing to configure here: when RustDesk is deployed as described in this article, connections are end-to-end encrypted by default.